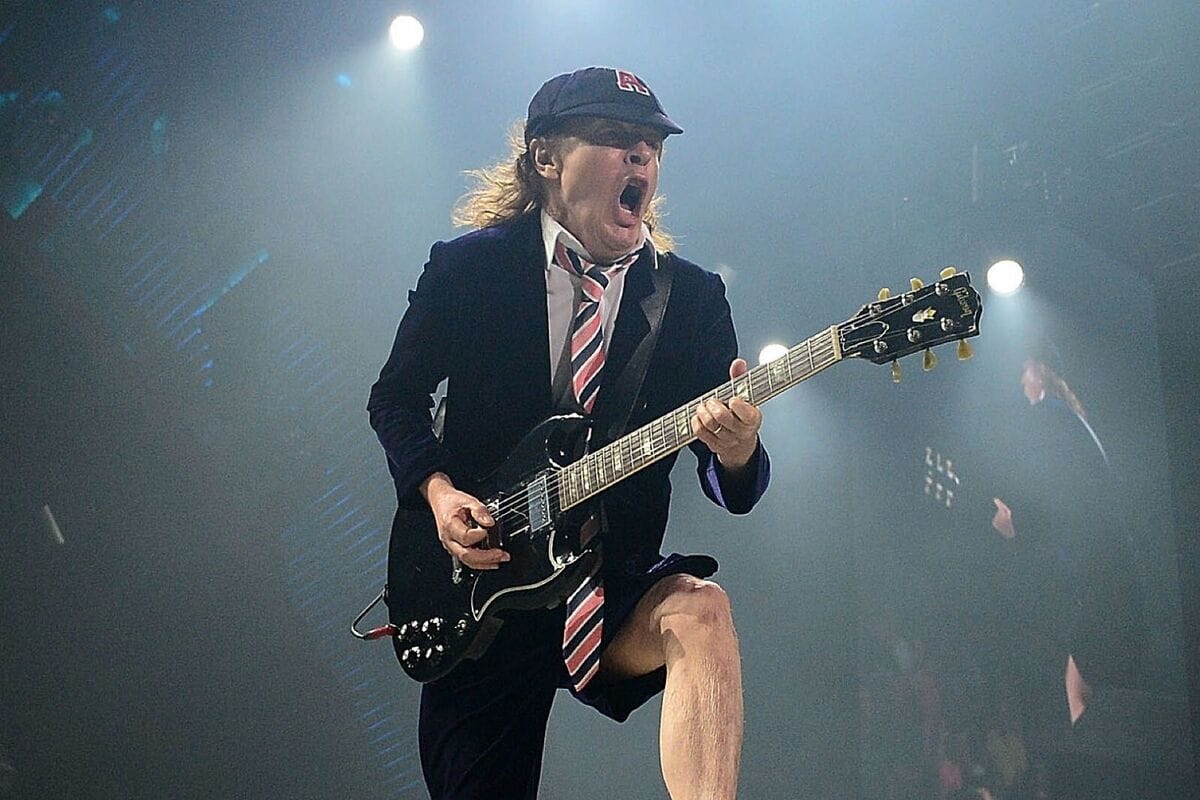

Thunderstruck or Highway to Hell?

Apple, it seems, isn't only focused on on-device work when it comes to AI:

Apple has been working on its own chip designed to run artificial intelligence software in data center servers, a move that has the potential to give the company an advantage in the AI arms race.

Over the past decade, Apple has emerged as a leading player designing chips for iPhones, iPads, Apple Watch and Mac computers. The server project, which is internally code-named Project ACDC—for Apple Chips in Data Center—will bring this talent to bear for the company’s servers, according to people familiar with the matter.

Project ACDC has been in the works for several years and it is uncertain when the new chip will be unveiled, if ever. Apple has promised many new AI products and announcements at its Worldwide Developer Conference in June.

This is hardly a surprise, since Apple famously wants to own "the entire stack" and do as much as possible without relying on third parties. The surprise, perhaps, is just the timing of this. Just last week, Apple CFO Luca Maestri was asked about any potential changes in CapEx due to AI – something Wall Street is watching closely with all the tech players right now, of course. His answer, while not direct, was a pretty clear indication that Apple would be partnering on AI in data centers, just as they've done with regard to the cloud. As I wrote last week:

This is Maestri saying that Apple won't need to spend as much on CapEx because they're not planning to build out and train LLMs exclusively on their own servers as many of their peers have. This echoes Apple's cloud strategy in general, which has included using their own servers mixed with those from Amazon and others.

But reading a little more between these lines, it also may suggest that Apple, as rumored, plans to outsource some of their AI work to partners in their own clouds, while Apple itself focuses most of their AI work on what they can do on-device. Obviously, this will shift over time depending on how things evolve with AI, but it will be interesting to see what Wall Street thinks about this hybrid approach. I suspect they'll like it – if not now, at the point when they decide that all this AI build-out spend has been excessive. Much as the ramp in content spend by the streaming players was rewarded at first before it was used against them.

Again, this "shift over time" may be happening a lot sooner than expected. Mark Gurman at Bloomberg followed up with a report yesterday on the initiative (thankfully confirming the excellent "ACDC" codename):

Apple Inc. will deliver some of its upcoming artificial intelligence features this year via data centers equipped with its own in-house processors, part of a sweeping effort to infuse its devices with AI capabilities.

The company is placing high-end chips — similar to ones it designed for the Mac — in cloud-computing servers designed to process the most advanced AI tasks coming to Apple devices, according to people familiar with the matter. Simpler AI-related features will be processed directly on iPhones, iPads and Macs, said the people, who asked not to be identified because the plan is still under wraps.

"This year", with Gurman going on to note that these servers will be running on Apple's M2 Ultra chips at first, before eventually shifting over to the M4 variety, a transition which started this week with the iPad Pro.

Relatively simple AI tasks — like providing users a summary of their missed iPhone notifications or incoming text messages — could be handled by the chips inside of Apple devices. More complicated jobs, such as generating images or summarizing lengthy news articles and creating long-form responses in emails, would likely require the cloud-based approach — as would an upgraded version of Apple’s Siri voice assistant.

It will be interesting to see how Apple handles this from a product perspective. One nice part of "outsourcing" any cloud AI work is that it would have undoubtedly made Apple's on-device AI work seem insanely fast versus the work being done in the cloud. But if Apple is now going to do some of that work itself, will they try to set expectations for the differences? Even more gnarly:

Handling AI features on devices will still be a big part of Apple’s AI strategy. But some of those capabilities will require its most recent chips, such as the A17 Pro launched in last year’s iPhone and the M4 chip that debuted in the iPad Pro earlier this week. Those processors include significant upgrades to the so-called neural engine, the part of the chip that handles AI tasks.

In other words, certain AI tasks will require the newest iPhones/iPads. How they'll surface that and set customer expectations around that will be fascinating to watch. The good news (for Apple) is that it will give people a tangible reason to upgrade to the latest devices. The bad news is that it will create more of a class divide between models.

Back to WSJ:

For Apple’s server chip, the component will likely be focused on running AI models—what’s known as inference—rather than on training AI models, where chip maker Nvidia will likely continue to dominate, according to some of the people.

Again, this makes sense and helps explain Maestri's comments at a more granular level. It might be as simple as:

Apple prefers that most uses of AI happen on an iPhone or Apple Watch, but it still needs to run some processes on remote servers accessed over the internet, which is when the Apple server chip would take over. By handling more of those tasks itself, even with the chips in the data center, Apple can have more control of its AI destiny.

Putting this all together, and assuming these reports are accurate, Apple's AI strategy would seem to be (with a number of questions still, of course):

- "Simple" AI tasks: run on-device on all newer (iOS 18-compatible) iPhones/iPads...

- "More Complex" AI tasks: run on-device on newest iPhones 16 (maybe 'Pro' models only or maybe not if they have the same A18 chip? or maybe it's RAM dependent?) and iPads Pro...

- "Complicated" AI tasks: run on-cloud on Apple's servers (if an iPhone/iPad isn't powerful enough to run something "more complex" per above does Apple send it to the cloud?)...

- Certain "Generative" AI tasks (such as chatbots): run via third-party services on their servers (presumably)...
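For the more code-minded, here's a rough sketch of how that four-tier routing might look as a decision function. To be clear, this is purely my own illustration of the list above, not anything Apple has described: every name here (`Tier`, `Target`, `Device`, `route`, the `has_newest_chip` flag) is made up, and the fallback from "more complex" to Apple's cloud on older hardware is just one possible answer to the open question above.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Hypothetical task tiers, mirroring the four bullets above."""
    SIMPLE = "simple"              # e.g. notification summaries
    MORE_COMPLEX = "more_complex"  # wants the newest neural engine
    COMPLICATED = "complicated"    # e.g. image generation, long-form replies
    GENERATIVE = "generative"      # e.g. chatbots


class Target(Enum):
    """Hypothetical places a task could run."""
    ON_DEVICE = "on-device"
    APPLE_CLOUD = "Apple servers"
    THIRD_PARTY = "third-party servers"


@dataclass
class Device:
    name: str
    has_newest_chip: bool  # assumption: a single flag stands in for chip/RAM checks


def route(tier: Tier, device: Device) -> Target:
    """Pick where a task runs under the assumptions stated above."""
    if tier is Tier.SIMPLE:
        return Target.ON_DEVICE
    if tier is Tier.MORE_COMPLEX:
        # Assumption: older devices fall back to Apple's cloud rather than failing.
        return Target.ON_DEVICE if device.has_newest_chip else Target.APPLE_CLOUD
    if tier is Tier.COMPLICATED:
        return Target.APPLE_CLOUD
    # Remaining tier: generative tasks such as chatbots (presumably third-party).
    return Target.THIRD_PARTY


if __name__ == "__main__":
    older = Device("older iPhone", has_newest_chip=False)
    newest = Device("newest iPad Pro", has_newest_chip=True)
    for tier in Tier:
        print(tier.value, "|", route(tier, older).value, "|", route(tier, newest).value)
```

The only branch where the device matters is the "more complex" tier, which is exactly where the class divide between models would show up.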

Again, the real challenge will be making this all feel seamless to an end-user. I'm sure Apple doesn't want a lot of "I'm sorry Dave, I'm afraid I can't do that."