'OpenAI Original'

There is a lot in here. But at the highest level, this is OpenAI co-founder Ilya Sutskever's new company:

Now Sutskever is introducing that project, a venture called Safe Superintelligence Inc. aiming to create a safe, powerful artificial intelligence system within a pure research organization that has no near-term intention of selling AI products or services. In other words, he’s attempting to continue his work without many of the distractions that rivals such as OpenAI, Google and Anthropic face. “This company is special in that its first product will be the safe superintelligence, and it will not do anything else up until then,” Sutskever says in an exclusive interview about his plans. “It will be fully insulated from the outside pressures of having to deal with a large and complicated product and having to be stuck in a competitive rat race.”

I'm reminded of Coca-Cola Classic. That is, the branding the soda company had to go with after the 'New Coke' debacle in the 1980s to let everyone know that the original product they knew and loved was back. 'Safe Superintelligence' sounds a lot like 'OpenAI Original'.1 Ashlee Vance, who got the scoop on the new initiative, seems to agree:

Safe Superintelligence is in some ways a throwback to the original OpenAI concept: a research organization trying to build an artificial general intelligence that could equal and perhaps surpass humans on many tasks. But OpenAI’s structure evolved as the need to raise vast sums of money for computing power became evident. This led to the company’s tight partnership with Microsoft Corp. and contributed to its push to make revenue-generating products. All of the major AI players face a version of the same conundrum, needing to pay for ever-expanding computational demands as AI models continue to grow in size at exponential rates.

As everyone is well aware at this point, Sutskever was part of the attempted coup at OpenAI as one of the board members who ousted CEO Sam Altman. But then in the truly bizarre days that followed, he apparently changed his mind – or was convinced to change his mind – and welcomed Altman back. Though interestingly, Sutskever, unlike Altman, was not welcomed back to the board after the fiasco. And all-the-right-things-said™ aside, he was basically nowhere to be found within the company. And soon he was no longer found at all within the company.

His departure, again despite all-the-right-things-said™, was fairly awkward: he was said to be leaving to work on something he was truly passionate about. The wording seemed intended to suggest that he wouldn't be working in AI any longer. And certainly not on something that competes with OpenAI. But of course he was going to work in AI; it's the space where he's arguably the best in the world at what he does. You don't just give that up and walk away. Certainly not right now! And certainly not if you're a true believer in the need for all of this to be done safely, as Sutskever clearly is!

And so I guess you could argue that the new company isn't technically competing with OpenAI, but only because the Safe Superintelligence team presumably believes OpenAI isn't doing what they aim to do from a safety perspective. OpenAI was perhaps doing that at one point, when Sutskever and his co-founder Daniel Levy, also a former OpenAI colleague, were there, but now its focus is elsewhere: perhaps still on the goal of AGI, which this new company clearly wants to brand as "Superintelligence", but more from a product perspective.

Safe Superintelligence makes its mandate pretty clear on its spartan website:

Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures.

In other words, from Safe Superintelligence's vantage point, OpenAI should perhaps be called 'Unsafe Superintelligence'.

And just like that we have an exciting new chaotic wrinkle in the AI space, yet again. I'm not sure we've reached the limit of companies aiming for the same basic thing in slightly different ways, but surely we must be close to it. Right? Right?! The good news, I guess, is that all of these startups will push one another. The completely unknown news is... towards what, exactly?

I can't wait to hear how Elon Musk is going to try to stir this stew. Presumably, he'll be, um, aligned with Safe Superintelligence more than with OpenAI, the company he co-founded with Sutskever and Altman, was effectively ousted from, and was until very recently suing for straying from its original goals.2 So yes, he's clearly not going to side with OpenAI, but he may side with 'OpenAI Original', as it were. But, of course, he now has his own play here with xAI, which he might deem the one true 'OpenAI Original'.

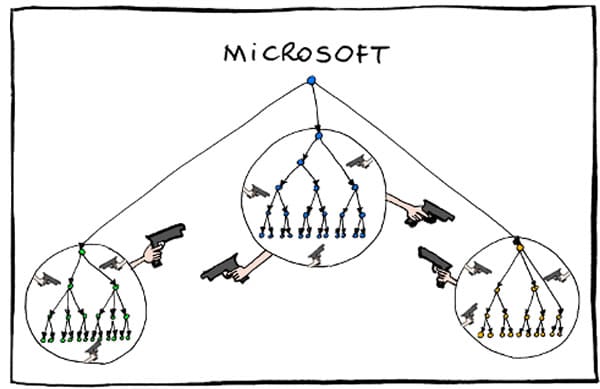

And what must Microsoft think of all this? An OpenAI co-founder now founding another AI company that is not OpenAI. But maybe they're fine with that given that this co-founder was the key cog that led to the shitshow they had to step in to fix. And clearly, Sutskever was going to be a pain to them as they kept going down the commercialization path. But they may now be going down that path with or without OpenAI anyway. And OpenAI is sort of going down that path with Apple. It's all very Microsoft org-chart.

Also as Vance points out:

Sutskever has two co-founders. One is investor and former Apple Inc. AI lead Daniel Gross, who’s gained attention by backing a number of high-profile AI startups, including Keen Technologies. (Started by John Carmack, the famed coder, video game pioneer and recent virtual-reality guru at Meta Platforms Inc., Keen is trying to develop an artificial general intelligence based on unconventional programming techniques.)

Gross is arguably the most active investor in AI at the moment. Maybe other than Altman. Or Microsoft. The race is officially on to scale "no conflict, no interest".

This fascination with Sutskever’s plans only grew after the drama at OpenAI late last year. He still declines to say much about it. Asked about his relationship with Altman, Sutskever says only that “it’s good,” and he says Altman knows about the new venture “in broad strokes.” Of his experience over the last several months he adds, “It’s very strange. It’s very strange. I don’t know if I can give a much better answer than that.”

"It's very strange. It's very strange."

1 I guess I don't absolutely hate the branding, because it's straightforward: Safe Superintelligence. But it also doesn't sound super serious. I keep being reminded of "Superblood Wolfmoon" for some reason, though perhaps that's just because I'm going to see Pearl Jam soon. The name is also confusing enough that Vance linked to the wrong URL in his story: it's '.inc', not '.com'.

2 He's also long been said to be the one who originally recruited Sutskever out of Google to join OpenAI, back in the day.