The Overwhelming Chaos of AI is Underwhelming

The current state of AI is a mess. Not necessarily the technology, though that's a part of it, but more the overall narrative around it. Depending on who you talk to, you'll either get hyperventilating responses about how it's about to change everything, or hyperventilating responses about how it's going to ruin everything. There's no nuance here. Perhaps "Web3" primed this polarization pump. Regardless, AI is now pouring gasoline over everything. And everyone has a match.

Late last week, Steven Levy published a post entitled "It's Time to Believe the AI Hype". It's a good takedown/rebuttal of an utterly ridiculous NYT Op-Ed by Julia Angwin. Levy notes that her "Press Pause on the Silicon Valley Hype Machine" could well end up being this generation's "Why the Web Won't Be Nirvana", where Clifford Stoll made the 1995 case for the internet being a passing fad. I'm partial to John Dvorak's 2007 magnum opus "Apple should pull the plug on the iPhone", but it's all the same general hyperbolic take in the face of endless hyperbole on the other side that "this changes everything". But sometimes, just sometimes, mind you, as Levy points out, technology does change everything.

I must repeat: I admire Angwin as a tech-savvy investigative reporter. But I’m baffled that she attempts to debunk AI because of an analysis that allegedly shows that GPT-4 can’t really ace the Uniform Bar Exam with a score in the 90th percentile, as OpenAI claims. She found one researcher who says that the chatbot passed the bar by placing only as well as 48 percent of human beings who spent three years in classes and several months studying 24/7 to successfully pass the test. That’s like the story where someone takes a friend to a comedy club to see a talking dog. The canine comic does a short set with perfect diction. But the friend isn’t impressed—“The jokes bombed!”

Folks, when dogs talk, we’re talking Biblical disruption. Do you think that future models will do worse on the law exams?

To me, it's sort of the airplane WiFi effect: we have access to all the world's information and can chat with anyone while traveling at 35,000 feet and moving 500 mph through thin air – and it's often the most frustrating experience ever. In our age of relentless wonders, you can quickly lose sight, and the plot.

The plot, in this case, is indeed that technology improves. And it's usually not one thing that drives the improvement, but many things in concert. And that's why the pace of improvement often accelerates. AI is the best example of this yet. The problem in this field right now, as I see it, is almost the opposite one. It's all improving too fast for anyone to have a good enough grasp of the current state of the art to actually make compelling use-cases (and products) that will last beyond a demo at worst and a few months at best. OpenAI has been able to productize better than anyone else to date, which is why I believe they're seeing the success they have. But they too are having their own hiccups along the way.

Said another way, we need the underlying bits of technology here to settle a bit before we can truly build the products that Angwin and others believe will never happen. I understand that to some, this looks like the "Web3" hype cycle – but it's a lazy comparison. Yes, they're both technology. So are a Furby and the iPhone. Plenty of us knew that cycle was bullshit. While there was some interesting technology underneath, it was largely driven by the ability to make a quick buck. And plenty of quick bucks were made! And some people went to jail as a result. It all led to the cycle burning out and crashing faster than it might have otherwise.

To be clear, there is an AI bubble forming here as well – certainly on the funding side of the equation. But you, as a consumer, shouldn't need to worry about that. Bubbles aren't always bad, and they tend to naturally form in such super cycles. When they burst, what's real is what's left. And we're all left with some technological innovation that was perhaps ahead of its skis. And new players figure out how to take advantage of what was built out and left behind.

Rinse, repeat.

Anyway, now I'm ahead of my skis. The other element that is confusing people about AI right now is that it's such a nebulous term. It means a lot of different things. And so while I actually agree that we may be nearing a point where one subset of AI, chatbots, hits a sort of logical wall in terms of improvements, it's going to lead to other elements of AI springing up to get around such walls. It has actually already been happening, and it's part of why you're now starting to hear non-stop talk about "agents". And now OpenAI's GPT-4o (as well as Google's "Astra") seems like it has sprung from these blockades.

As an outside observer, it almost feels as if AI is evolving the way an organic entity might. I don't mean this to be some sort of creepy or messianic or apocalyptic statement! It's just sort of fascinating how pieces of technology seem to evolve from different companies and projects almost in tandem. Image generation. Chatbots. Video generation. Music generation. Vocal computing. Obviously some of this is driven by competition. But I don't believe all of it is. It's like when Hollywood studios decided to make movies about volcanoes or asteroids at the exact same time.

Okay, it's not really like that. But you get the point.

All of this leads to AGI. Increasingly, I'm not sure it's not just a distraction from what is actually going on here. Said another way, we can have 'AI' without the 'G' and we already do. I'm not entirely sure we need the 'G' or that we'll ever fully see the 'G'. Part of that problem is one of definition – every person seems to have a different definition for what AGI is – and thus, no one actually agrees what AGI is. So with that in mind, of course some people will never believe we've achieved AGI. Others think we already have. This argument will never end.

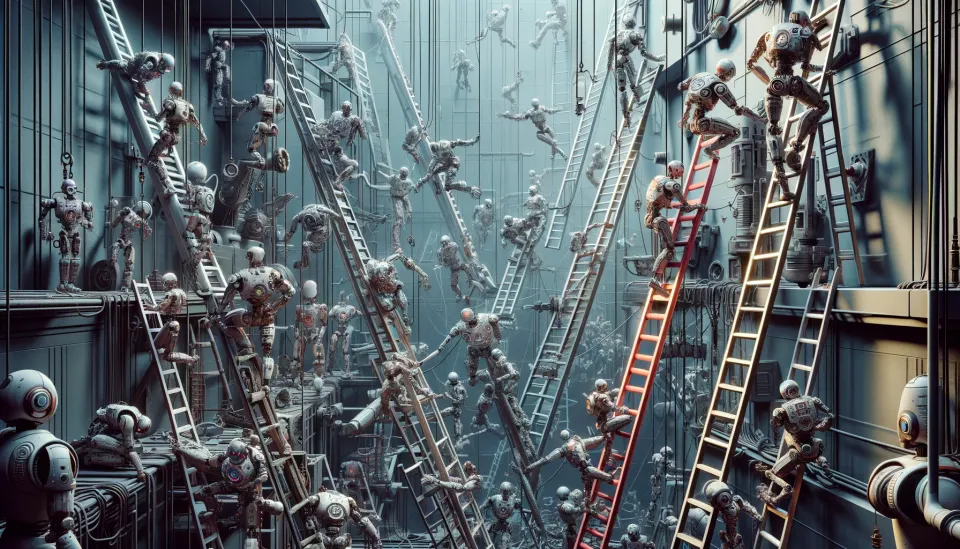

Again, my point is that maybe it doesn't matter. The "AI revolution" is happening. Play has been pressed! Pause will not be. At some point, the underlying technology will stabilize in the form of true platforms on which to build. Things will calm down, which will be both good and needed. But that's not happening yet, and so it all feels chaotic to some and at the same time, underwhelming to others. It's all just changing too fast to plant product flags. It's like trying to drink a glass of water if you're sitting on that airplane. On the outside wing of that airplane. Eventually, we'll work our way on board. And start complaining about the WiFi.
