o3, o4-mini, o4-mini-high, o My

I know that OpenAI knows that this naming scheme is ridiculous. No less than Sam Altman has tried to front-run everyone making fun of this. And I know that they're working on fixing it. But still, it simply must be made fun of, especially since all of the various AI model companies have fallen into this same general trap to varying degrees. This can never happen again.

And I don't even have to make jokes here. I just have to type it out to see if I actually can wrap my head around OpenAI's naming scheme. And my own chain-of-thought, as it were, will be the actual joke.

Okay, so we now have o3 and o4-mini. They both sound great on paper – in particular, o3 seems impressive. To the point where some are whispering "AGI". I, for one, will not believe we have achieved AGI until an AI can fully explain this naming structure. And... we're not there yet.

Anyway, o3 replaces o1. Where's o2? That, it seems, is a casualty of IP rights. OpenAI apparently didn't want to go down the path Steve Jobs once tried in going after a European telecom company. Meanwhile, o4-mini replaces o3-mini, which was just released in January. As the name implies, it's a smaller model, but also seemingly a generation beyond o3. But per OpenAI's own tests, o3 still outperforms o4-mini on a range of vectors. So yes, at a high level, o3 is better than o4-mini. And in general, I'm okay with having a "mini" variation of a model, as it clearly will be smaller and able to run faster. It would have been much cleaner to have o3 and o3-mini as options at the same time, but alas, the model gods did not align here.

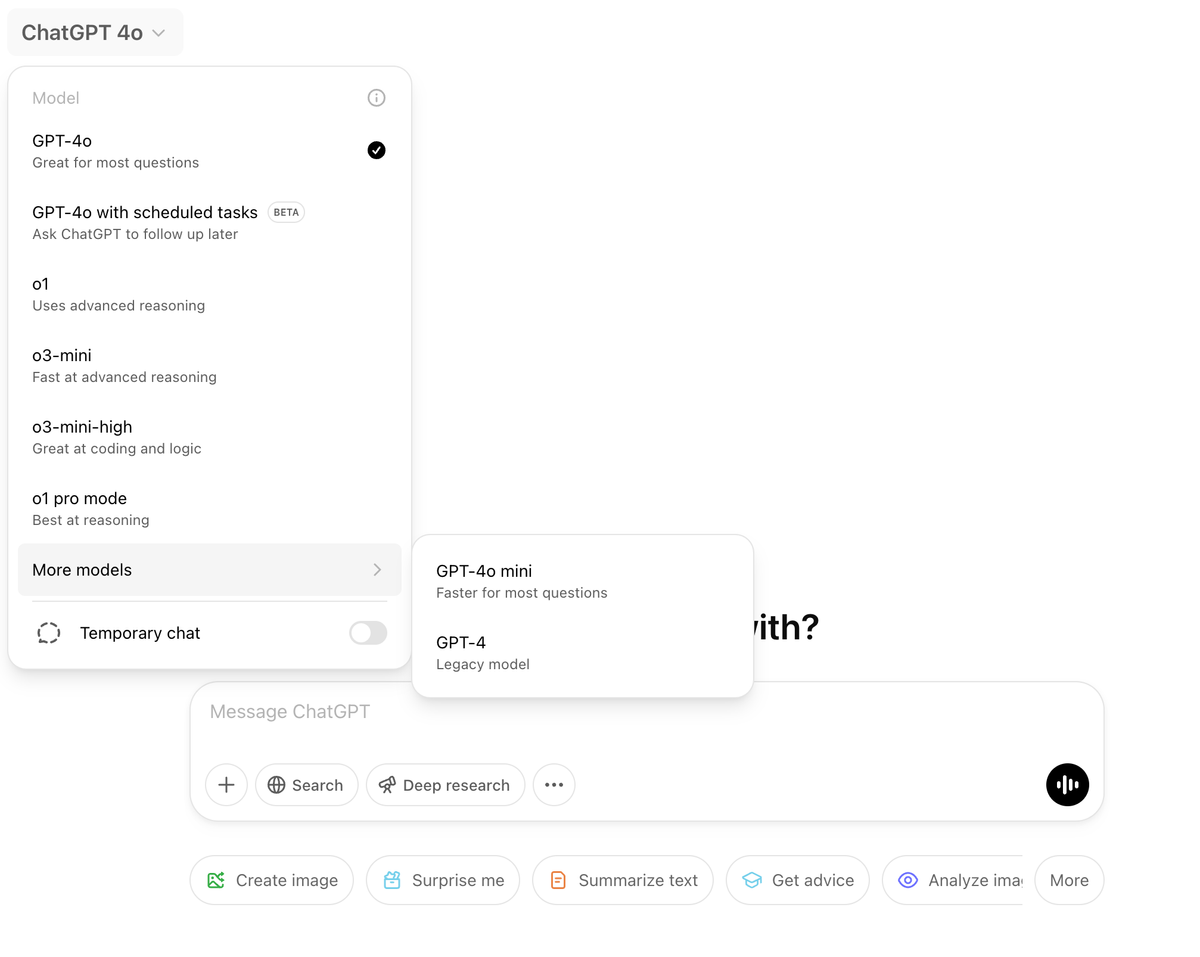

Where things start to get really gnarly is the fact that ChatGPT also has added an o4-mini-high in the drop-down. The company doesn't even bother to explain this one in their blog post; they just include it in some of the benchmarks and mention that it will now be an option. This is not the first "high" model, of course. And presumably if you're going to use it, you know what it means and does. But 99% of users will have no such knowledge or context. All you get is the one-line description in the drop-down: "great at coding and visual reasoning". But that's also what OpenAI just wrote that o3 and o4-mini were great at!

The good news is that GPT-4o remains the "main" model for most users. Why we still need an option for GPT-4o with scheduled tasks, I don't know. Nor could I tell you when you should use this. It's the to-do list thing. But it clearly should just be a part of GPT-4o, maybe with a toggle, if you must? I'm sure there's a rationale here, but I don't want to hear it.

Also still available in the dreaded 'More models' sub-drop-down: GPT-4o mini. Again, we've been over the idea of "mini", which is fine at a high level. But how many users are realistically using this now, especially with it shoved into that sub-menu? This is clearly the way OpenAI subtly (and slowly) winds down older models, but it's no less silly. Though not quite as silly as having GPT-4 still here as an option as well. But don't worry, as it says in the description, that one is leaving in two weeks. Get your GPT-4 fix while it lasts, I guess.

Okay, so yes, GPT-4o remains the main model to use for most tasks. But don't get confused in thinking that GPT-4o and o4-mini are related – let alone o4-mini-high. Despite both having '4s' and 'os', they're completely different, you see. Here, the 'mini' may actually help in this way. Imagine if/when o4 is ready to roll and if GPT-4o is still around. Now that will be confusing.

Again, OpenAI is saying this naming clusterfuck will be resolved by then. And presumably they're waiting on GPT-5 to unify such things. But they've also been waiting for a while, which is clearly why they just released o3 and o4-mini (and o4-mini-high), even though Altman previously said they were not going to do that. Personally, I still wish they would use their cute fruit nicknames for such models – Anthropic is better in that regard – but that idea never quite ripened, apparently.

As the world waits for GPT-5, earlier in the week we got GPT-4.1. Of course, you can't actually use it yet within ChatGPT itself, only via the API. And oh boy the naming scheme goes to the next level here in terms of confusion.

Alongside GPT-4.1 we got (well again, the API got) GPT-4.1 mini and GPT-4.1 nano. Unlike, say, o4-mini, there is no dash between 'GPT-4.1' and 'mini'. Why? Nobody knows. I mean, there is a dash between 'GPT' and '4.1', so presumably this is just to fuck with people trying to write about these models. And why is there no o4-nano? Presumably because o4-mini is small enough? But GPT-4.1 mini is not small enough. Or would it be o4 nano, without the dash? Impossible to speculate here.

Here's the real kick in the head: GPT-4.1 is replacing GPT-4.5 Preview in the API. Yes, the lower-numbered GPT is replacing the higher-numbered GPT. Why? Presumably because GPT-4.5 Preview is so expensive to run and operate. But OpenAI also says that GPT-4.1 actually outperforms GPT-4.5 Preview in some ways. And so they're giving GPT-4.5 Preview three months to live in the API.

By the way, why is 'Preview' capitalized when 'mini' and 'nano' are not? Nobody knows. Certainly not Apple!

Not to worry though, GPT-4.5 Preview will continue to live on in ChatGPT itself, presumably until GPT-5 is ready to roll. But actually, GPT-4.1 may replace GPT-4.5 Preview there as well, though there's no timetable for that. Again, it all may depend on when GPT-5 is ready. So yes, we may have a replay of this confusing situation where GPT-4.1 replaces GPT-4.5 Preview – perhaps before it even has a chance to lose the 'Preview'! The "emo" model is likely to be emo about that...

And that presumes that GPT-4.1 is the heir apparent to GPT-4o, at least until GPT-5 arrives. Again, everyone assumed that GPT-4.5 would be the hold-over, but now it seems like GPT-4.1 might be. Yes, even though GPT-4o has the coveted 'o' and GPT-4.1 does not. Or maybe they'll go with GPT-4.1o – but perhaps only if it has the reasoning chops that 'o' implies. But not enough reasoning chops to be an actual 'o' model like o3 or o4-mini or o4-mini-high.

Are you having fun yet? My fingers are not, due to having to hit backspace constantly to correct the names here because it's so fucking confusing.

Again, this is all just an exercise to make sure OpenAI does the right thing here when they're ready to clean up the naming scheme and perhaps unify the models. If o3 is smart enough to use the other myriad tools that OpenAI offers, it's smart enough to pick the right model for whatever you happen to type into the prompt box.

> For the first time, our reasoning models can agentically use and combine every tool within ChatGPT—this includes searching the web, analyzing uploaded files and other data with Python, reasoning deeply about visual inputs, and even generating images. Critically, these models are trained to reason about when and how to use tools to produce detailed and thoughtful answers in the right output formats, typically in under a minute, to solve more complex problems. This allows them to tackle multi-faceted questions more effectively, a step toward a more agentic ChatGPT that can independently execute tasks on your behalf.

I just want a prompt box, and for 99% of use cases I want ChatGPT to sort out what model to use behind the scenes based on what I'm asking. Give us the full chain-of-thought flow to instill trust that ChatGPT is smart enough to make the right call here. For the other 1%, there can be some sort of streamlined model picker. This will mainly be for power users, obviously. But with ChatGPT now going fully mainstream, this all clearly needs to happen pronto.