OpenAI Reasons ‘o1’ is a Better Name than ‘Strawberry’

Well, what was coming in “the next couple of weeks” ended up being “the next couple of days” — here’s Kylie Robison:

OpenAI is releasing a new model called o1, the first in a planned series of “reasoning” models that have been trained to answer more complex questions, faster than a human can. It’s being released alongside o1-mini, a smaller, cheaper version. And yes, if you’re steeped in AI rumors: this is, in fact, the extremely hyped Strawberry model.

Part of me wonders if the company shouldn’t have stuck with the “Strawberry” nickname; as Robison notes, the press really seemed to latch on to it. Even I was not immune. This recalls the days when Mac OS X started using its “big cat” nicknames as the official marketing names. That lives on to this day with macOS being named after places in California (even though all of the other Apple OSes use generic numbers), increasingly to my chagrin.

I like the idea that OpenAI models can have fruit names. Perhaps they worry this veers too close to Android’s dessert nicknames (which also seemed derived from the fun OS X names)? But it also helps to humanize the technology. ‘OpenAI Strawberry’ sounds a lot friendlier than ‘OpenAI o1’. Or, if we want to keep using ‘GPT’, who wouldn’t pick, say, ‘GPT Blueberry’ over ‘GPT-4o’?

Certainly, ‘o1’ is less problematic than ‘Q*’ for all the obvious conspiratorial internet reasons, but it’s also awfully generic-sounding, though there was intention behind it:

Still, the company believes it represents a brand-new class of capabilities. It was named o1 to indicate “resetting the counter back to 1.”

“I’m gonna be honest: I think we’re terrible at naming, traditionally,” McGrew says. “So I hope this is the first step of newer, more sane names that better convey what we’re doing to the rest of the world.”

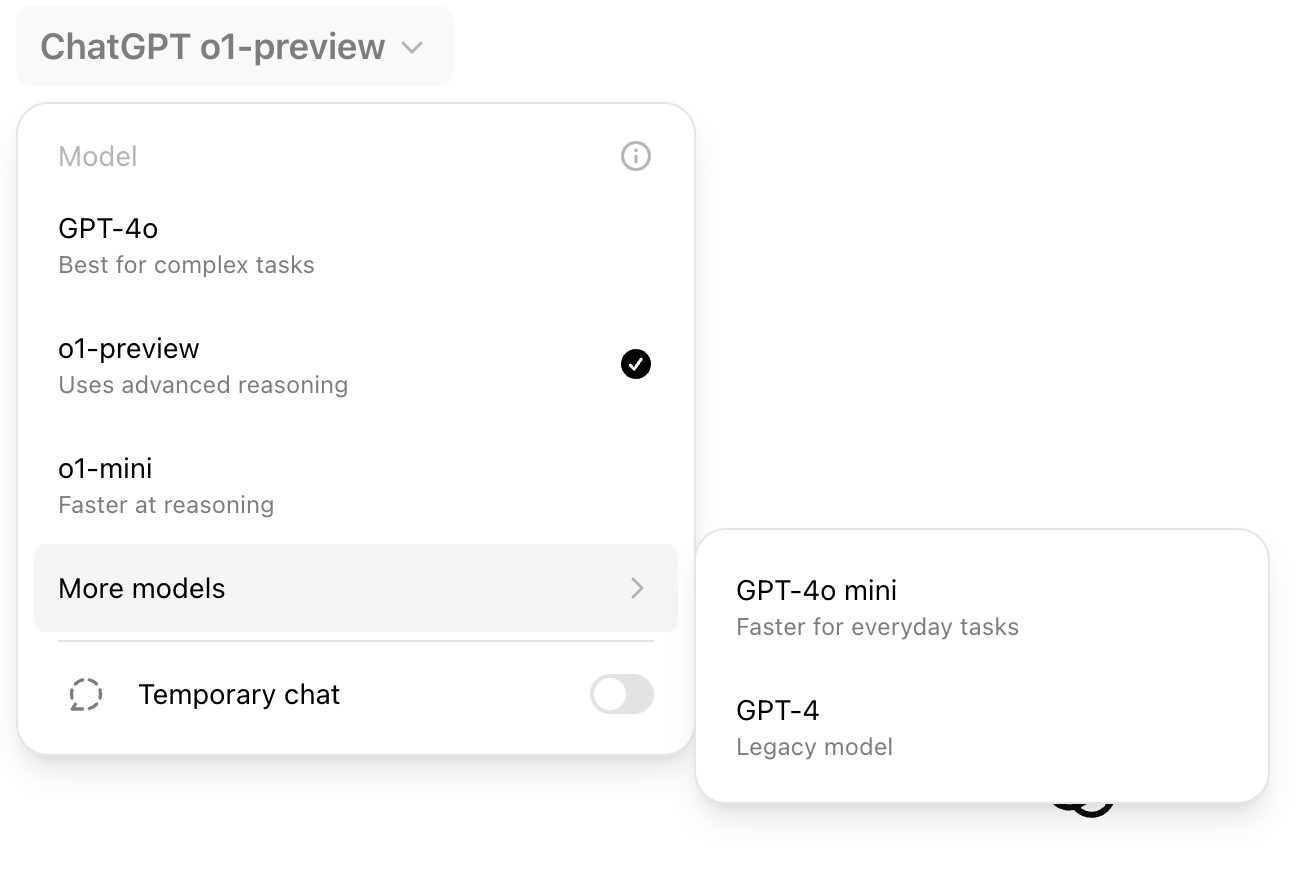

Yeah, I’m not so sure about that. As a paying ChatGPT user, I got access to ‘o1’ yesterday and it’s arguably more confusing than ever in terms of how to use the service. The drop-down menu to pick which model you wish to use now features a sub-menu to show all of the options. It’s starting to feel Microsoftian…

Maybe that was a stipulation of the tech giant’s participation in the new round of funding? I kid, I kid, but also: sub-menus in drop-down menus!

For OpenAI, o1 represents a step toward its broader goal of human-like artificial intelligence. More practically, it does a better job at writing code and solving multistep problems than previous models. But it’s also more expensive and slower to use than GPT-4o. OpenAI is calling this release of o1 a “preview” to emphasize how nascent it is.

Again, this creates a bit of user confusion about which model to use, and when. And the naming scheme does not help: “oh yeah, you have to use ‘o1’ for that, not ‘4o’.” What? That’s also a potentially expensive question:

ChatGPT Plus and Team users get access to both o1-preview and o1-mini starting today, while Enterprise and Edu users will get access early next week. OpenAI says it plans to bring o1-mini access to all the free users of ChatGPT but hasn’t set a release date yet. Developer access to o1 is really expensive: In the API, o1-preview is $15 per 1 million input tokens, or chunks of text parsed by the model, and $60 per 1 million output tokens. For comparison, GPT-4o costs $5 per 1 million input tokens and $15 per 1 million output tokens.

On the other hand, it’s nice that paying ChatGPT users got access without being upsold, as had been rumored.

It’s sort of strange that OpenAI wouldn’t let Robison play around with the new model herself, instead giving her a walk-through of its capabilities, especially since, again, they rolled it out so quickly afterward. That’s unlike, say, GPT-4o voice, which still isn’t available to most users months after OpenAI very publicly previewed it.

The model buffered for 30 seconds and then delivered a correct answer. OpenAI has designed the interface to show the reasoning steps as the model thinks. What’s striking to me isn’t that it showed its work — GPT-4o can do that if prompted — but how deliberately o1 appeared to mimic human-like thought. Phrases like “I’m curious about,” “I’m thinking through,” and “Ok, let me see” created a step-by-step illusion of thinking.

But this model isn’t thinking, and it’s certainly not human. So, why design it to seem like it is?

OpenAI doesn’t believe in equating AI model thinking with human thinking, according to Tworek. But the interface is meant to show how the model spends more time processing and diving deeper into solving problems, he says. “There are ways in which it feels more human than prior models.”

I actually like this element of it; again, it humanizes the technology, in a way that, yes, will make many feel uncomfortable. But it may also help some feel a bit better about the outputs: the computer took some time to reason its way through what was asked of it, rather than spitting out an answer instantly in a way that makes it seem awfully confident. These are interesting problems and questions!