The Great AI UI Unification

He just... tweeted it out. I had this whole rant ready to go in my head about the UX state of the various AI tools – and ChatGPT in particular, since it's the most popular and most egregious – when Sam Altman pre-empted the post with his tweet a couple days ago:

We want to do a better job of sharing our intended roadmap, and a much better job simplifying our product offerings.

We want AI to “just work” for you; we realize how complicated our model and product offerings have gotten.

We hate the model picker as much as you do and want to return to magic unified intelligence.

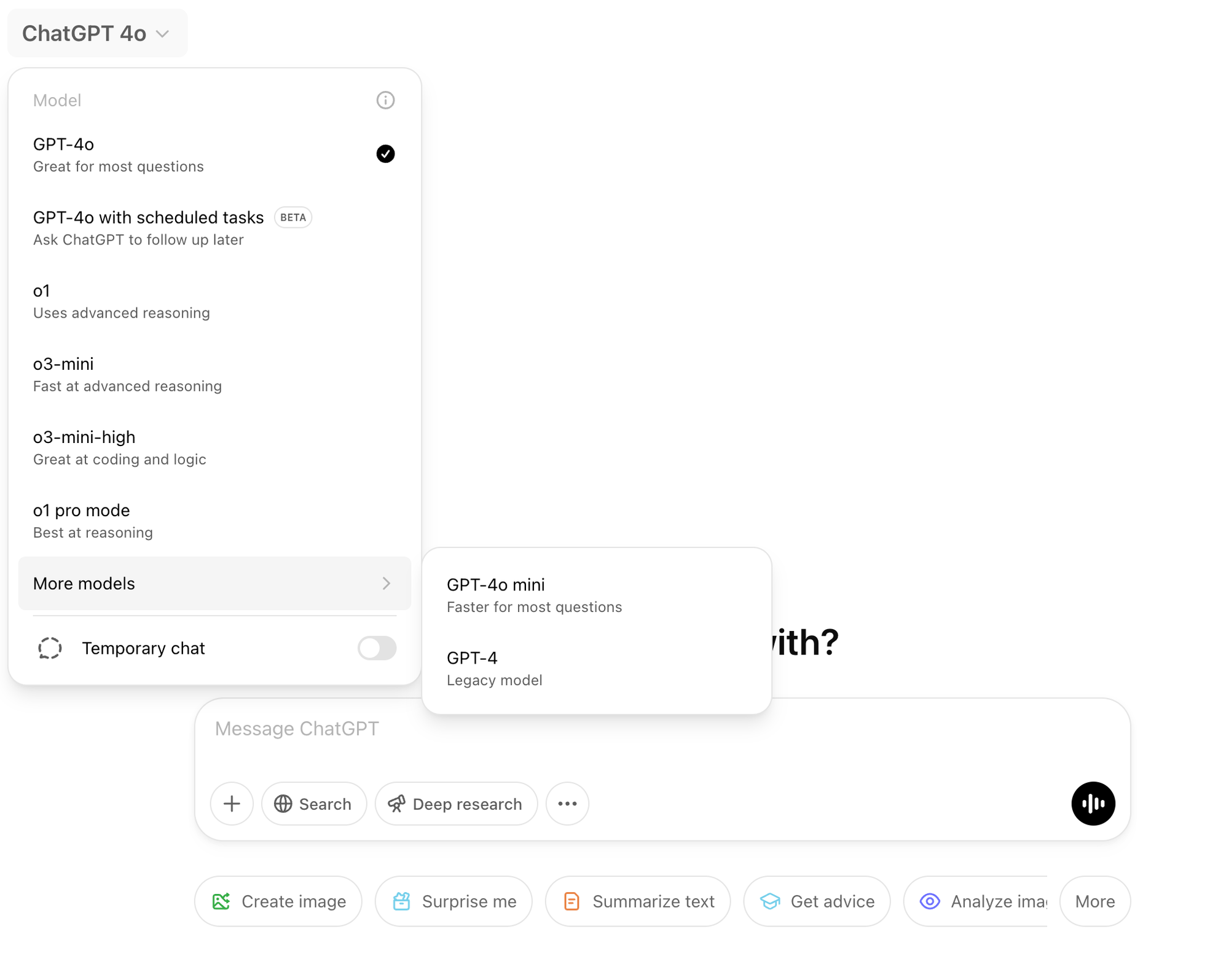

This is, of course, great news. I've been making fun of the model picker drop-down since last year when o1 (aka "Strawberry" – the codenames remain much better than the actual names, fight me) took things to another level, literally. OpenAI added a sub-menu to ChatGPT's drop-down, making room for five different model options to choose from. As I wrote at the time:

As a paying ChatGPT user, I got access to ‘o1’ yesterday and it’s arguably more confusing than ever in terms of how to use the service. The drop-down menu to pick which model you wish to use now features a sub-menu to show all of the options. It’s starting to feel Microsoftian…

Well, now we're up to eight options – six in the main drop-down and still those same two "left-overs" in the sub-menu. And technically it's nine options if you include the "Temporary chat" toggle.

And depending on which model you choose to use, you get other options from there, as various buttons will be enabled in the message box for 'Web Search' and now 'Deep Research'. Oh and I forgot the '+' button, which has more options depending on where you're using it. And, of course, there's the '...' button in this box too, giving you a sub-menu for the 'Image' or 'Canvas' options. Oh yes, and Voice Mode. (And voice transcription on certain devices.)

Forget Microsoftian, it's downright Gordian!

It was obviously untenable, and OpenAI knew that, and saw all the jokes. And so now they're finally cleaning it up. But importantly, the clean-up job isn't just shoving all the options in yet more sub-menus, it's seemingly about changing some pretty fundamental elements of how ChatGPT operates. Design, in this case, really is how it works.

Altman goes on to give a few high-level updates on GPT-4.5 (aka "Orion"), which is "weeks" away, and even GPT-5, which is "months" away. Notably, GPT-4.5 will be their last "non-chain-of-thought model". This, of course, is in response to DeepSeek.1

Somewhat related, the real breakthrough of DeepSeek, the product, may end up being the notion that surfacing chain-of-thought work to end-users helps to instill a deeper level of trust in the outputs. And everyone from OpenAI on down is now scrambling to add more such visibility to their products.

It was probably too late to add this into GPT-4.5, but it sounds like GPT-5 will be chain-of-thought-ready. The real question is whether this also points to a world where that model is open source.2 That would be truly massive news in the wake of DeepSeek. And there are signs indicating it may happen. And others are moving in that direction...

But back to that drop-down and back to Altman:

After that, a top goal for us is to unify o-series models and GPT-series models by creating systems that can use all our tools, know when to think for a long time or not, and generally be useful for a very wide range of tasks.

In both ChatGPT and our API, we will release GPT-5 as a system that integrates a lot of our technology, including o3. We will no longer ship o3 as a standalone model.

Imagine this: an input box with no options. You simply type something and ChatGPT figures out your intent. Insert the joke about needing to ask ChatGPT which ChatGPT model to use – except, really! That's how it should more or less work, albeit mostly transparently (unless you want to see the chain-of-thought!) to users. I mean, obviously there will be some options, but those will be meant more for power users, as is the case with many tools.

The timing of this revelation was also interesting in light of a new report by Stephanie Palazzolo for The Information revealing that the reason Anthropic has been so slow to release a reasoning model is that they're aiming to take the same general unified approach that Altman outlined above. And it's coming soon. Model-pickers are so 2024. 2025 is going to be all about the model picking itself.

In that way, I'm reminded of Google. It's easy to forget now, but one of the key aspects of the actual product was the spartan design. It was a search box. That's it. None of the noise that Yahoo had. None of the cruft of AltaVista. Just a name and a search box. And that's largely still true 25 years later! There are options, but mainly after you let Google try to sort it out for you.

In fact, I think one of the key understated elements of Google throughout the years is just how good it is at figuring out your intent. It's honestly a better spell checker than spell checkers are. You can type something that's borderline gibberish and Google will usually figure it out. That type of intangible breeds user trust and usage. ChatGPT is actually quite good at it too, so they should just remove some of the creeping cruft.

And now they are.

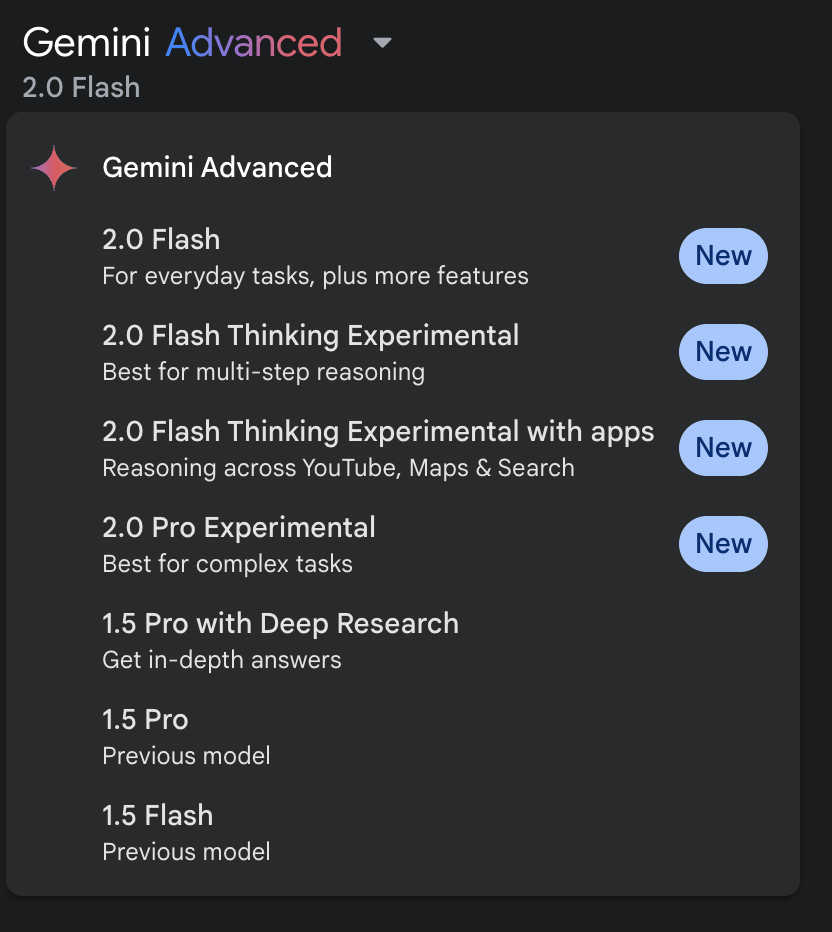

You know who else should do this? Google! Gemini currently has seven options in their model picker drop-down.3 To be fair, they don't have sub-menus, but what they lack there, they make up for with garish "New" glyphs. And as esoteric as OpenAI's model names may be, that's how literal – and, as such, literally bad – Google's are. Looking at you, '2.0 Flash Thinking Experimental with apps'.4

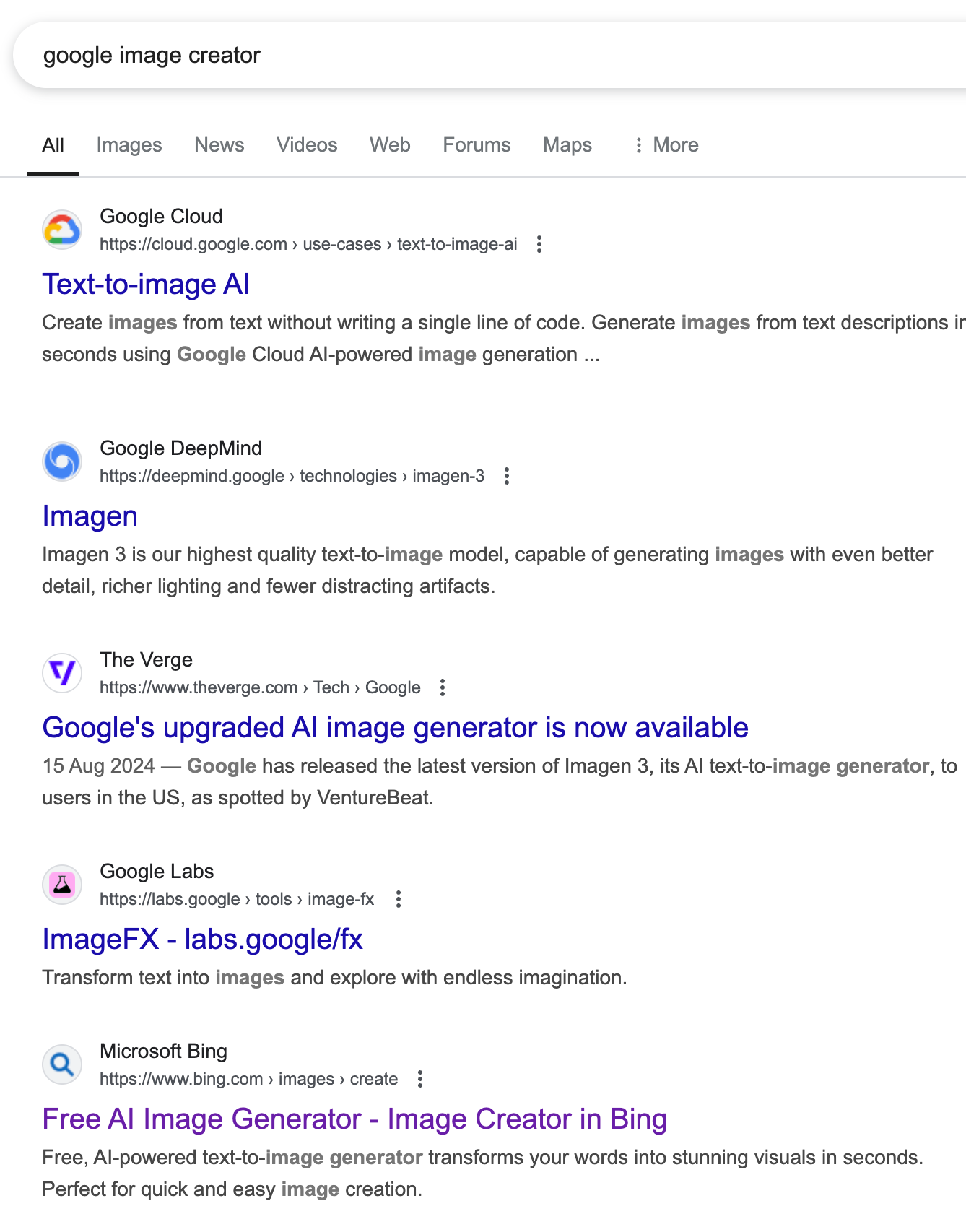

Google arguably has more pressing issues than their drop-downs in that I doubt most people could find where, say, their AI image creation tool is – let alone the video one, which they undoubtedly can't use anyway despite it being "unveiled". They're all on various sub-sites. But not on Gemini's domain, which itself is a sub-site of Google, because they don't own Gemini.com – because, of course, the crypto company of that name does – which seems like it could be a problem at some point.5

Anyway, just try Googling "Google image creator" and you'll get some fun results. 'Imagen' is not number one because Google Cloud's 'Text-to-image AI' seemingly has more juice. Imagen is buried as a sub-site on Google's DeepMind sub-site. That site tells you that you can use it in Gemini (which is not obvious, but how it should be – and zero of the top results mention Gemini) or that you can try it on ImageFX, yet another sub-site of yet another sub-site: this time, Google Labs. Why? Nobody knows.

Anyway, there's a lot to clean up in AI product land. I'm glad that it's starting to happen as it's a mess right now. And the "normals" are coming. Fast.

1 It's certainly plausible and perhaps even probable that OpenAI had this in the works before DeepSeek, but without question, they had to move up timelines to ship any chain-of-thought work sooner. And they're hardly alone there.

2 Which undoubtedly will really mean "open weight", if anything.

3 Though it changes based on whether you're a paid "Advanced" user or not.

4 While this also follows some of DeepSeek's chain-of-thought UI revelations, to be fair to Google, they did start moving in this direction in December.

5 Still, the name beats Bard. A brand which lasted exactly 10 months.