Apple Fails to Overreact to the AI Revolution

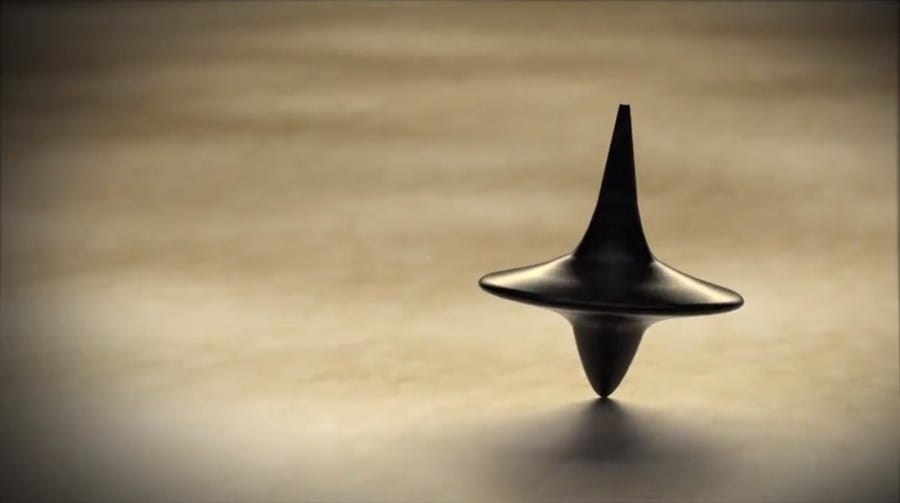

This was a first: writing the title for a podcast on which I was a guest. But as you'll hear in this episode of the Big Technology Podcast with Alex Kantrowitz, I was thinking about this as a working title for one of two pieces I was going to write after the WWDC keynote. Instead, I went with the more grandiose: Did Apple Just End AI's First Era? (The other: The Voice Assistant Who Cried Wolf.)

Alex and I talk about the ideas in those posts and a lot more surrounding 'Apple Intelligence', including, of course, Wall Street's reaction to the news – which started out muted, at best, and now has seemingly catapulted Apple back into pole position as the most valuable company in the world. Microsoft, of course, cannot be happy about that, about Apple's OpenAI deal, about a lot of things. They'll have to regroup and respond.

Anyway, I'm obviously biased, but I thought this conversation turned out well. And Apple's actual announcements also put the podcast Alex & I recorded back in April in a good light. The focus there:

While it had been all quiet on the LLM front for quite some time, Apple's release of eight (!) models of varying sizes to the open source community on Hugging Face (here's the white paper) would seem to indicate that this will be a big part of their approach to the space announced at WWDC in a few weeks. That is, Apple will focus their own AI efforts on things that can be done on device, while they will perhaps outsource other AI elements in the cloud to third parties.

The only thing slightly off there is that while Apple is "outsourcing" some AI elements to OpenAI (and likely others, down the road), they've also built out their own cloud, complete with Apple Silicon, to process AI tasks which require more compute than their on-device models can handle. In the weeks following this April podcast, I spent way too much time trying to think through how OpenAI could underpin a new Siri, amongst other things. Again, this was over-thinking it. Apple developed these models for a reason: they're using them. The fallback option is ChatGPT.

This imagining of the experience ended up being pretty close (beyond the voice change – at least for now!):

So is it just some pop-up you see once or every-so-often after you opt-in to using ChatGPT (or any other third-party service)?

One thing that might be fun from a product perspective is to maintain the Siri voice (of which there are a number now, of course) for anything Apple serves up, and have another voice for anything from OpenAI. It's probably too subtle, and the whole Scarlett Johansson debacle has sort of cast a pall over these voices for now, but imagine Siri saying something like: "Hmm, I'm not sure about that one. Do you want me to ask my friend, Sky?"

Though Apple may insist on using 'ChatGPT' instead of 'Sky' (and certainly not 'Sky' now, sadly) to make it very clear which entity is serving up the information to come. And that would also help OpenAI's brand halo with ChatGPT – or hurt it depending on the result!